See Through Sound

A Project in AWS - Meta XR Hackathon, 2023

Summary

This project is a mixed reality application for Quest 3. Spatial information is one of the most important features of mixed reality, so we considered how information that used to live on the 2D plane of a screen could be brought into 3D. We believe a shift toward a three-dimensional information age is coming. In that age, sound could be visualized and shared with other people through visual cues, so that information is passed on in an attractive and interactive way. For people with hearing impairments in particular, there should be a way to transform sound information into visual information in space.

Working with three software engineers, I helped develop a spatial annotation tool that allows for context-rich annotations in space, turning the environment into an interactive canvas for expression and communication. Users can attach voice messages to an anchor and place it in space for others to discover. I was responsible for user experience design and graphic design in this project.

Design Question

How might we design an intuitive interface for a spatial annotation tool that effectively translates sound information into visual cues in a mixed reality environment, catering to both general users and individuals with hearing impairments?

Users:

1) General users: Leave messages for other people and learn about the information in the space.

2) Individuals with hearing impairments: Appreciate the messages left by other people and learn about the information in the space.

Main Functions

Voice Message

Users can choose an object and attach a voice message to it as an anchor.

Stick the Object

Users can leave the anchor in the space by sticking it to the spatial mesh.
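As a rough illustration of how the voice message and sticking steps might fit together, the sketch below models an anchor as a small record and snaps it to the closest vertex of a scanned mesh. It is not the hackathon implementation: the names `VoiceAnchor` and `stick_to_mesh`, the audio file path, and the nearest-vertex rule are all assumptions made for this example, and a real Quest 3 build would rely on the headset's own scene mesh and spatial anchor features instead.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class VoiceAnchor:
    """Hypothetical record for one spatial annotation."""
    object_name: str                     # the object the user picked
    audio_path: str                      # where the recorded message is stored (assumed)
    position: Vec3 = (0.0, 0.0, 0.0)     # current position in room space, in metres
    stuck: bool = False                  # True once attached to the mesh


def stick_to_mesh(anchor: VoiceAnchor, mesh_vertices: List[Vec3]) -> VoiceAnchor:
    """Snap the anchor to the nearest mesh vertex (a simplification of surface snapping)."""
    if not mesh_vertices:
        raise ValueError("the spatial mesh has no vertices to stick to")
    nearest = min(mesh_vertices, key=lambda v: math.dist(v, anchor.position))
    anchor.position = nearest
    anchor.stuck = True
    return anchor


# Example: an anchor released near a wall corner snaps onto it.
anchor = VoiceAnchor("star", "messages/hello.wav", position=(0.9, 1.0, 0.1))
mesh = [(0.0, 0.0, 0.0), (1.0, 1.0, 0.0), (5.0, 5.0, 5.0)]
stick_to_mesh(anchor, mesh)
```

Snapping to a vertex keeps the example short; snapping to the closest point on a mesh triangle would place the anchor more precisely on real surfaces.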

Draw with Hands

Users can pick up a pen and draw in the space.

Throw the Object

Users can pick up the anchor and throw it anywhere in the space.
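To give a feel for the throwing interaction, here is a minimal sketch that estimates where a thrown anchor would land on a flat floor. Everything in it is an assumption for illustration: the function name `simulate_throw`, the flat floor at a fixed height, and the simple fixed-step integration. A game engine's physics system would normally handle this, including collisions with the spatial mesh.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def simulate_throw(
    start: Vec3,
    velocity: Vec3,
    floor_y: float = 0.0,
    dt: float = 0.01,
    gravity: float = -9.81,
    max_steps: int = 10_000,
) -> Vec3:
    """Step a thrown object forward under gravity until it reaches the floor."""
    x, y, z = start
    vx, vy, vz = velocity
    for _ in range(max_steps):
        vy += gravity * dt        # gravity only changes vertical speed
        x += vx * dt
        y += vy * dt
        z += vz * dt
        if y <= floor_y:          # landed: clamp to the floor height
            return (x, floor_y, z)
    return (x, y, z)              # still in the air after max_steps


# Example: released at 1.5 m height, thrown forward at 2 m/s.
landing = simulate_throw(start=(0.0, 1.5, 0.0), velocity=(2.0, 0.0, 0.0))
```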

I came up with multiple functions, including some forms of artistic expression, to bridge the gap between people with hearing impairments and general users, focusing on how to translate information between sound and visuals through MR interaction. After discussing as a team, we agreed that artistic expression may be a bonus, but the spatial anchor is the fundamental building block behind all the fancier features. So we decided to focus on the spatial anchoring tool in the limited time available.

We also prioritised the four functions and decided to implement the voice message and sticking the object first.

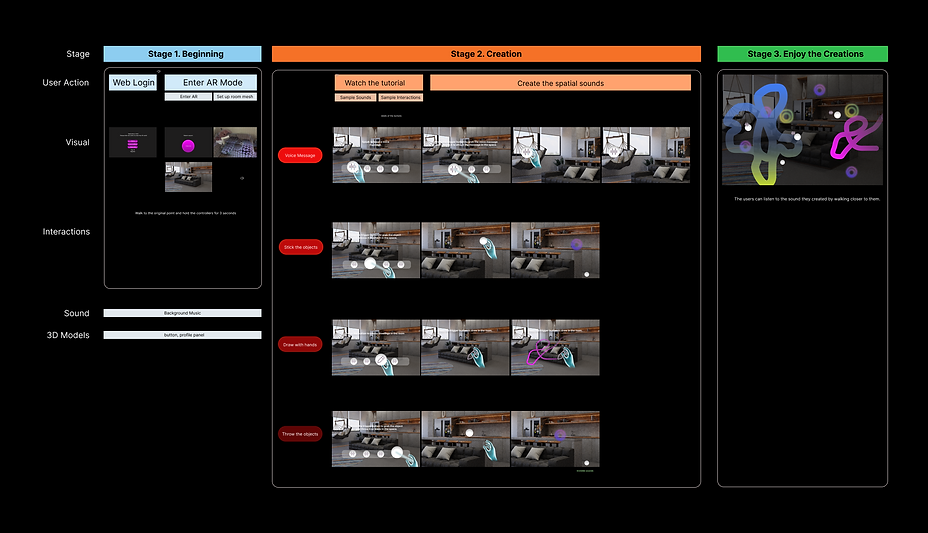

User Experience Journey

I made the user journey map based on the prioritised functions so the developers would have a template to refer to.

User Interface

I created the animated UI in Spline.

Video